
# Introduction

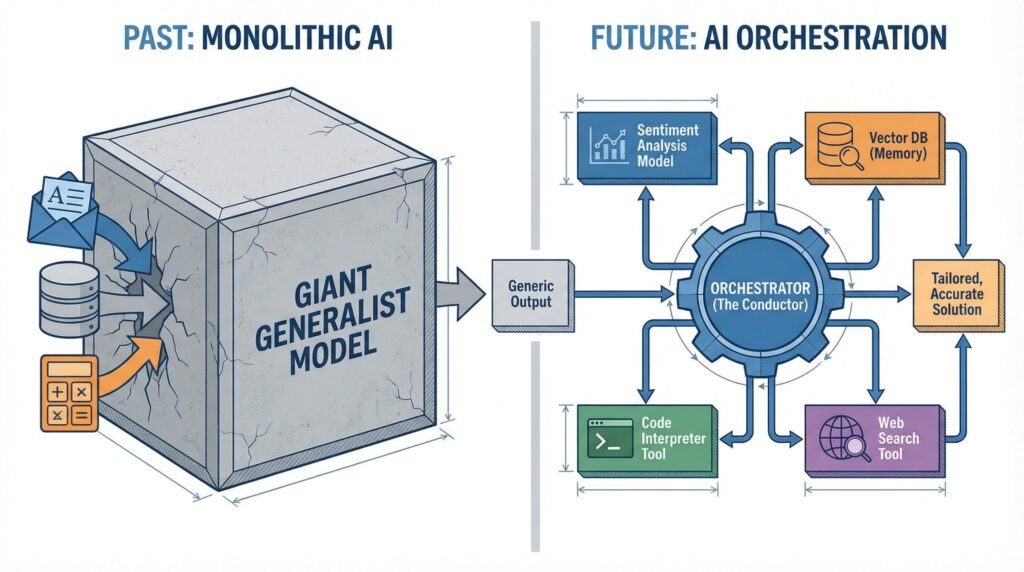

For the past two years, the AI industry has been locked in a race to build ever-larger language models. GPT-4, Claude, Gemini: each promising to be the singular solution to every AI problem. But while companies competed to create the biggest brain, a quiet revolution was happening in production environments. Developers stopped asking “which model is best?” and started asking “how do I make multiple models work together?”

This shift marks the rise of AI orchestration, and it’s changing how we build intelligent applications.

# Why One AI Can’t Rule Them All

The dream of a single, all-powerful AI model is appealing. One API call, one response, one bill. But reality has proven more complex.

Consider a customer service application. You need sentiment analysis to gauge customer emotion, knowledge retrieval to find relevant information, response generation to craft replies, and quality checking to ensure accuracy. While GPT-4 can technically handle all these tasks, each requires different optimization. A model trained to excel at sentiment analysis makes different architectural tradeoffs than one optimized for text generation.

The breakthrough isn’t in building one model to rule them all. It’s in coordinating multiple specialists.

This mirrors a pattern we’ve seen before in software architecture. Microservices replaced monolithic applications not because any single microservice was superior, but because coordinated specialized services proved more maintainable, scalable, and effective. AI is having its microservices moment.

# The Three-Layer Stack

Understanding modern AI applications requires thinking in layers. The architecture that’s emerged from production deployments looks remarkably consistent.

// The Model Layer

The Model Layer sits at the foundation. This includes your LLMs, whether GPT-4, Claude, local models like Llama, or specialized models for vision, code, or analysis. Each model brings specific capabilities: reasoning, generation, classification, or transformation. The key insight is that you’re no longer choosing one model. You’re composing a collection.

// The Tool Layer

The Tool Layer enables action. Language models can think but can’t do anything on their own. They need tools to interact with the world. This layer includes web search, database queries, API calls, code execution environments, and file systems. When Claude “searches the web” or ChatGPT “runs Python code,” they’re using tools from this layer. The Model Context Protocol (MCP), recently released by Anthropic, is standardizing how models connect to tools, making this layer increasingly plug-and-play.
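To make the tool layer concrete, here is a minimal sketch of a tool dispatcher. It assumes the model emits a tool call as a JSON string with `tool` and `args` fields; that format, the `TOOLS` registry, and both example tools are hypothetical and are not the MCP wire format or any framework's API.

```python
import json
from typing import Any, Callable

def search_orders(customer_id: str) -> list:
    # Hypothetical tool backed by an in-memory table instead of a real database.
    orders = {"c42": ["order-1001", "order-1002"]}
    return orders.get(customer_id, [])

def add(a: float, b: float) -> float:
    # Hypothetical tool standing in for a code execution environment.
    return a + b

# Registry mapping tool names (as the model would emit them) to callables.
TOOLS: dict[str, Callable[..., Any]] = {"search_orders": search_orders, "add": add}

def execute_tool_call(raw_call: str) -> dict:
    """Parse a JSON tool call, run the matching tool, and report the outcome."""
    call = json.loads(raw_call)
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        return {"ok": False, "error": f"unknown tool: {call.get('tool')}"}
    try:
        return {"ok": True, "result": tool(**call.get("args", {}))}
    except TypeError as exc:
        # Raised when the model supplies missing or unexpected arguments.
        return {"ok": False, "error": str(exc)}

print(execute_tool_call('{"tool": "search_orders", "args": {"customer_id": "c42"}}'))
print(execute_tool_call('{"tool": "send_email", "args": {}}'))
```

Returning a structured error instead of raising lets the orchestrator pass the failure back to the model, which can then retry with corrected arguments or choose another tool.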

// The Orchestration Layer

The Orchestration Layer coordinates everything. This is where the intelligence of your system actually lives. The orchestrator decides which model to invoke for which task, when to call tools, how to chain operations together, and how to handle failures. It’s the conductor of your AI symphony.

Models are musicians, tools are instruments, and orchestration is the sheet music that tells everyone when to play.

# Orchestration Frameworks: Understanding the Patterns

Just as React and Vue standardized frontend development, orchestration frameworks are standardizing how we build AI systems. But before we discuss specific tools, we need to understand the architectural patterns they represent. Tools come and go. Patterns endure.

// The Chain Pattern (Sequential Logic)

The Chain Pattern (Sequential Logic) is orchestration’s most basic pattern. Think of it as a data pipeline where each step’s output becomes the next step’s input: take the user question, retrieve context, generate a response, validate the output. Each operation happens in sequence, with the orchestrator managing the handoffs. LangChain popularized this pattern and built an entire framework around making chains composable and reusable.

The strength of chains lies in their simplicity: you can reason about the flow, debug step-by-step, and optimize individual stages. The limitation is rigidity. Chains don’t adapt based on intermediate results. If step two discovers the question is unanswerable, the chain still marches through steps three and four. But for predictable workflows with clear stages, chains work well.
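A chain can be sketched in a few lines of plain Python. This is an illustration of the pattern, not LangChain's API: the three stage functions, the `state` dictionary, and the tiny document store are all hypothetical stand-ins for real retriever and model calls.

```python
from typing import Callable

def retrieve_context(state: dict) -> dict:
    # Hypothetical retrieval stage: keyword lookup in a tiny in-memory store.
    docs = {"refund": "Refunds are processed within 5 business days."}
    hits = [text for key, text in docs.items() if key in state["question"].lower()]
    return {**state, "context": hits}

def generate_response(state: dict) -> dict:
    # Hypothetical generation stage: a real system would call an LLM here.
    context = " ".join(state["context"]) or "No context found."
    return {**state, "answer": f"Based on our records: {context}"}

def validate_output(state: dict) -> dict:
    # Hypothetical validation stage: only answers backed by context count as valid.
    return {**state, "valid": bool(state["context"])}

def run_chain(steps: list[Callable[[dict], dict]], state: dict) -> dict:
    # Each step receives the previous step's output. No branching, no early exit.
    for step in steps:
        state = step(state)
    return state

result = run_chain(
    [retrieve_context, generate_response, validate_output],
    {"question": "How long does a refund take?"},
)
print(result["answer"], "| valid:", result["valid"])
```

The rigidity is visible in `run_chain`: a question with no matching context still passes through `generate_response` before validation flags it.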

// The RAG Pattern (Retrieval-First Logic)

The RAG Pattern (Retrieval-First Logic) emerged from a specific problem: language models hallucinate when they lack information. The solution is simple: retrieve relevant information first, then generate responses grounded in that data.

But architecturally, RAG represents something deeper: Just-in-Time Context Injection. Think of it as the separation of Compute (the LLM) from Memory (the Vector Store). The model itself remains static. It doesn’t learn new facts. Instead, you swap what’s in the model’s “RAM” by injecting relevant context into its prompt window. You’re not retraining the brain. You’re giving it access to the exact information it needs, precisely when it needs it.

This architectural principle (Query, Search knowledge base, Rank results by relevance, Inject into context, Generate response) works because it turns a generative problem into a retrieval plus synthesis problem, and retrieval is more reliable than generation.
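The five steps (query, search, rank, inject, generate) can be sketched without any external services. In this toy version, `embed` is a bag-of-words counter standing in for a real embedding model, `KNOWLEDGE_BASE` is a hypothetical three-document store, and the final generation call is left out, so the output is the prompt a model would receive.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words term counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical knowledge base; a real system would keep these in a vector store.
KNOWLEDGE_BASE = [
    "Password resets are handled from the account settings page.",
    "Invoices are emailed on the first day of each month.",
    "The API rate limit is 100 requests per minute.",
]

def retrieve(query: str, k: int = 2) -> list[str]:
    # Search and rank: score every document against the query, keep the top k.
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    # Inject: place the retrieved text into the prompt ahead of the question.
    context = "\n".join(f"- {doc}" for doc in retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("What is the API rate limit?")
print(prompt)
```

Nothing about the model changes between queries. Only the context placed in the prompt does, which is the separation of compute from memory described above.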

What makes this a lasting pattern rather than just a technique is this separation of concerns. The model handles reasoning and synthesis. The vector store handles memory and recall. The orchestrator manages the injection timing. LlamaIndex built its entire framework around optimizing this pattern, handling the hard parts of document chunking, embedding generation, vector storage, and retrieval ranking. You can see how RAG works in practice even with simple no-code tools.

// The Multi-Agent Pattern (Delegation Logic)

The Multi-Agent Pattern (Delegation Logic) represents orchestration’s most sophisticated evolution. Instead of one sequential flow or one retrieval step, you create specialized agents that delegate to each other. A “planner” agent breaks down complex tasks. “Researcher” agents gather information. “Analyst” agents process data. “Writer” agents produce output. “Critic” agents review quality.

CrewAI exemplifies this pattern, but the concept predates the tool. The architectural insight is that complex intelligence emerges from coordination between specialists, not from one generalist trying to do everything. Each agent has a narrow responsibility, clear success criteria, and the ability to request help from other agents. The orchestrator manages the delegation graph, ensuring agents don’t loop infinitely and work progresses toward the goal. If you want to dive deeper into how agents work together, check out key agentic AI concepts.
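The delegation graph and its loop guard can be sketched as follows. This is not CrewAI's API: each agent is a hypothetical plain function that returns its output plus the name of the agent it delegates to next (or `None` when the work is done), and `max_steps` is an assumed budget that stops runaway delegation.

```python
from typing import Callable, Optional, Tuple

AgentResult = Tuple[str, Optional[str]]  # (output, name of next agent or None)

# Hypothetical agents; a real system would back each one with a model call.
def planner(task: str, notes: list) -> AgentResult:
    return "plan: research, then write, then review", "researcher"

def researcher(task: str, notes: list) -> AgentResult:
    return f"facts about {task}", "writer"

def writer(task: str, notes: list) -> AgentResult:
    return f"draft using {notes[-1]}", "critic"

def critic(task: str, notes: list) -> AgentResult:
    return "approved", None  # None ends the delegation chain

AGENTS: dict[str, Callable[[str, list], AgentResult]] = {
    "planner": planner, "researcher": researcher, "writer": writer, "critic": critic,
}

def run_crew(task: str, start: str = "planner", max_steps: int = 10) -> list:
    """Follow delegations from agent to agent until one returns None."""
    notes: list = []
    current: Optional[str] = start
    steps = 0
    while current is not None:
        if steps >= max_steps:
            # Guard against agents that keep delegating to each other forever.
            raise RuntimeError("delegation budget exhausted; possible loop")
        output, current = AGENTS[current](task, notes)
        notes.append(output)
        steps += 1
    return notes

for note in run_crew("vector databases"):
    print(note)
```

Keeping the step budget in the orchestrator rather than in the agents means a misbehaving agent cannot opt out of it.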

The choice between patterns isn’t about which is “best.” It’s about matching pattern to problem. Simple, predictable workflows? Use chains. Knowledge-intensive applications? Use RAG. Complex, multi-step reasoning requiring different specializations? Use multi-agent. Production systems often combine all three: a multi-agent system where each agent uses RAG internally and communicates through chains.

The Model Context Protocol deserves special mention as the emerging standard underneath these patterns. MCP isn’t a pattern itself but a universal protocol for how models connect to tools and data sources. Released by Anthropic in late 2024, it’s becoming the foundation layer that frameworks build upon, the HTTP of AI orchestration. As MCP adoption grows, we’re moving toward standardized interfaces where any pattern can use any tool, regardless of which framework you’ve chosen.

# From Prompt to Pipeline: The Router Changes Everything

Understanding orchestration conceptually is one thing. Seeing it in production reveals why it matters and exposes the component that determines success or failure.

Consider a coding assistant that helps developers debug issues. A single-model approach would send code and error messages to GPT-4 and hope for the best. An orchestrated system works differently, and its success hinges on one critical component: the Router.

The Router is the decision-making engine at the heart of every orchestrated system. It examines incoming requests and determines which pathway through your system they should take. This isn’t just plumbing. Routing accuracy determines whether your orchestrated system outperforms a single model or wastes time and money on unnecessary complexity.

Let’s return to our debugging assistant. When a developer submits a problem, the Router must decide: Is this a syntax error? A runtime error? A logic error? Each type requires different handling.

How an Intelligent Router acts as a decision engine to direct inputs to specialized pathways | Image by Author

Syntax errors route to a specialized code analyzer, a lightweight model fine-tuned for parsing violations. Runtime errors trigger the debugger tool to examine program state, then pass findings to a reasoning model that understands execution context. Logic errors require a different path entirely: search Stack Overflow for similar issues, retrieve relevant context, then invoke a reasoning model to synthesize solutions.

But how does the Router decide? Three approaches dominate production systems.

Semantic routing uses embedding similarity. Convert the user’s question into a vector, compare it to embeddings of example questions for each route, and send it down the path with the highest similarity. It is fast and effective for clearly distinct categories. The debugging assistant can use this when error types are well-defined and examples are plentiful.
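Here is a minimal sketch of semantic routing for the debugging assistant. The `embed` function is a toy bag-of-words counter standing in for a real embedding model, and the example phrases in `ROUTE_EXAMPLES` are invented for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words term counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical example questions for each route.
ROUTE_EXAMPLES = {
    "syntax": ["unexpected token near line", "missing closing parenthesis", "invalid syntax in file"],
    "runtime": ["program crashes with null pointer", "index out of range while running", "division by zero at runtime"],
    "logic": ["function returns wrong result", "output is incorrect but no error", "loop gives unexpected total"],
}

def semantic_route(query: str) -> tuple[str, float]:
    """Return the best-matching route and its similarity score."""
    q = embed(query)
    scores = {
        route: max(cosine(q, embed(example)) for example in examples)
        for route, examples in ROUTE_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]

print(semantic_route("my code has invalid syntax"))
print(semantic_route("the function returns the wrong result"))
```

Returning the score alongside the route matters: a low score signals that the request resembles none of the examples, so the caller can fall back to another routing strategy.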

Keyword routing examines explicit signals. If the error message contains “SyntaxError,” route to the parser. If it contains “NullPointerException,” route to the runtime handler. Simple, fast, and surprisingly solid when you have reliable indicators. Many production systems start here before adding complexity.
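A keyword router fits in a dozen lines. The `SyntaxError` and `NullPointerException` rules come from the example above. The other two keywords and the route names are assumptions added for illustration.

```python
from typing import Optional

# Ordered (keyword, route) rules; the first match wins.
KEYWORD_ROUTES = [
    ("SyntaxError", "parser"),
    ("IndentationError", "parser"),           # assumed extra rule
    ("NullPointerException", "runtime_handler"),
    ("ZeroDivisionError", "runtime_handler"),  # assumed extra rule
]

def keyword_route(error_message: str, default: Optional[str] = None) -> Optional[str]:
    """Return the route for the first keyword found, or the default if none match."""
    for keyword, route in KEYWORD_ROUTES:
        if keyword in error_message:
            return route
    return default

print(keyword_route("SyntaxError: invalid syntax (app.py, line 12)"))
print(keyword_route("java.lang.NullPointerException at Main.java:7"))
print(keyword_route("The totals look wrong"))
```

Returning `None` when nothing matches, rather than guessing, lets the caller decide whether to try a more expensive routing method.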

LLM-decision routing uses a small, fast model as the Router itself. Send the request to a specialized classification model that’s been trained or prompted to make routing decisions. More flexible than keywords, more reliable than pure semantic similarity, but adds latency and cost. GitHub Copilot and similar tools use variations of this approach.
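The sketch below shows the shape of LLM-decision routing without tying it to any provider. The `call_model` parameter is any function that takes a prompt and returns text, `fake_model` is a hypothetical stand-in for a small classification model, and the route labels are assumptions.

```python
from typing import Callable

ROUTES = ("syntax", "runtime", "logic")

def build_router_prompt(request: str) -> str:
    return (
        "Classify the debugging request into exactly one of: "
        + ", ".join(ROUTES)
        + ".\nReply with the label only.\n\nRequest: "
        + request
    )

def llm_route(request: str, call_model: Callable[[str], str], default: str = "logic") -> str:
    """Ask a model for a route label and validate the reply before trusting it."""
    reply = call_model(build_router_prompt(request)).strip().lower()
    # Models can return text outside the allowed labels, so fall back when they do.
    return reply if reply in ROUTES else default

def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a small, fast classification model.
    request = prompt.rsplit("Request: ", 1)[-1]
    return " Runtime\n" if "crash" in request else "I am not sure."

print(llm_route("App crashes on startup", fake_model))
print(llm_route("Totals look off", fake_model))
```

Validating the reply against a fixed label set is the important part: the router's output drives control flow, so free-form text should never reach the dispatch step.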

Here’s the insight that matters: the success of your orchestrated system depends far more on Router accuracy than on the sophistication of your downstream models. A perfect GPT-4 response to a misrouted request helps no one. A decent response from a specialized model, reached through correct routing, solves the problem.

This creates an unexpected optimization target. Teams obsess over which LLM to use for generation but neglect Router engineering. They should do the opposite. A simple Router making correct decisions beats a complex Router that’s frequently wrong. Production teams measure routing accuracy religiously. It’s the metric that predicts system success.
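Measuring routing accuracy needs only a labeled set of requests and the router under test. In this sketch, `toy_router` and the four labeled examples are hypothetical. The per-route breakdown is an added convenience, since an overall number can hide one weak route.

```python
from collections import defaultdict
from typing import Callable, Iterable, Tuple

def routing_accuracy(
    router: Callable[[str], str],
    labeled: Iterable[Tuple[str, str]],
) -> Tuple[float, dict]:
    """Return overall accuracy and accuracy per expected route."""
    totals: dict = defaultdict(int)
    hits: dict = defaultdict(int)
    for text, expected in labeled:
        totals[expected] += 1
        if router(text) == expected:
            hits[expected] += 1
    if not totals:
        raise ValueError("labeled set is empty")
    per_route = {route: hits[route] / totals[route] for route in totals}
    overall = sum(hits.values()) / sum(totals.values())
    return overall, per_route

def toy_router(text: str) -> str:
    # Hypothetical keyword router used only to exercise the metric.
    if "SyntaxError" in text:
        return "syntax"
    if "Exception" in text:
        return "runtime"
    return "logic"

LABELED = [
    ("SyntaxError: invalid syntax", "syntax"),
    ("NullPointerException at line 4", "runtime"),
    ("Segmentation fault", "runtime"),  # the toy router misses this one
    ("Sum is off by one", "logic"),
]

overall, per_route = routing_accuracy(toy_router, LABELED)
print(overall, per_route)
```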

The Router also handles failures and fallbacks. What if semantic routing isn’t confident? What if the web search returns nothing? Production Routers implement decision trees: try semantic routing first, fall back to keyword matching if confidence is low, escalate to LLM-decision routing for edge cases, and always maintain a default path for truly ambiguous inputs.
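The fallback sequence just described can be sketched as a single function that takes the three routers as arguments. The threshold of 0.6, the default route name, and the three stub routers are assumptions chosen for illustration.

```python
from typing import Callable, Optional, Tuple

def cascade_route(
    request: str,
    semantic: Callable[[str], Tuple[str, float]],
    keyword: Callable[[str], Optional[str]],
    llm: Callable[[str], Optional[str]],
    threshold: float = 0.6,
    default: str = "general_reasoning",
) -> Tuple[str, str]:
    """Return (route, stage that decided it), trying cheaper stages first."""
    route, confidence = semantic(request)
    if confidence >= threshold:
        return route, "semantic"
    matched = keyword(request)
    if matched is not None:
        return matched, "keyword"
    decided = llm(request)
    if decided is not None:
        return decided, "llm"
    return default, "default"  # truly ambiguous input

# Hypothetical stub routers standing in for real implementations.
def semantic_stub(request: str) -> Tuple[str, float]:
    return ("syntax", 0.9) if "syntax" in request.lower() else ("logic", 0.2)

def keyword_stub(request: str) -> Optional[str]:
    return "runtime" if "NullPointerException" in request else None

def llm_stub(request: str) -> Optional[str]:
    return "logic" if "wrong" in request.lower() else None

for text in ["Invalid syntax on line 3", "NullPointerException in main", "Result is wrong", "Help"]:
    print(text, "->", cascade_route(text, semantic_stub, keyword_stub, llm_stub))
```

Returning the deciding stage alongside the route makes it easy to log how often each fallback fires, which feeds directly into routing accuracy measurements.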

This explains why orchestrated systems can outperform single models despite added complexity. It’s not that orchestration magically makes models smarter. It’s that accurate routing ensures specialized models only see problems they’re optimized to solve. A syntax analyzer only analyzes syntax. A reasoning model only reasons. Each component operates in its zone of excellence because the Router protects it from problems it can’t handle.

The architecture pattern is universal: Router at the front, specialized processors behind it, orchestrator managing the flow. Whether you’re building a customer service bot, a research assistant, or a coding tool, getting the Router right determines whether your orchestrated system succeeds or becomes an expensive, slow alternative to GPT-4.

# When to Orchestrate, When to Keep It Simple

Not every AI application needs orchestration. A chatbot that answers FAQs? Single model. A system that classifies support tickets? Single model. Generating product descriptions? Single model.

Orchestration makes sense when you need:

Multiple capabilities that no single model handles well. Customer service requiring sentiment analysis, knowledge retrieval, and response generation benefits from orchestration. Simple Q&A doesn’t.

External data or actions. If your AI needs to search databases, call APIs, or execute code, orchestration manages those tool interactions better than trying to prompt a single model to “pretend” it can access data.

Reliability through redundancy. Production systems often chain a fast, cheap model for initial processing with a capable, expensive model for complex cases. The orchestrator routes based on difficulty.

Cost optimization. Using GPT-4 for everything is expensive. Orchestration lets you route simple tasks to cheaper models and reserve expensive models for hard problems.
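The cheap-first, escalate-when-needed idea behind the last two points can be sketched as below. It assumes the cheap model returns a confidence score alongside its answer, which not every model provides. Both model stubs and the 0.8 cutoff are hypothetical.

```python
from typing import Callable, Tuple

def answer_with_escalation(
    question: str,
    cheap_model: Callable[[str], Tuple[str, float]],
    expensive_model: Callable[[str], str],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Try the cheap model first; escalate only when it is not confident."""
    answer, confidence = cheap_model(question)
    if confidence >= min_confidence:
        return answer, "cheap"
    return expensive_model(question), "expensive"

def cheap_stub(question: str) -> Tuple[str, float]:
    # Hypothetical: pretends short questions are easy and long ones are hard.
    confident = len(question.split()) <= 6
    return "short answer", 0.95 if confident else 0.4

def expensive_stub(question: str) -> str:
    # Hypothetical stand-in for a larger, costlier model.
    return "detailed answer"

print(answer_with_escalation("What are your hours?", cheap_stub, expensive_stub))
print(answer_with_escalation(
    "Why did my March invoice include two different tax rates?",
    cheap_stub,
    expensive_stub,
))
```

The expensive model is only called, and only billed, for the requests the cheap model could not handle with confidence.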

The decision framework is straightforward: start simple. Use a single model until you hit clear limitations. Add orchestration when the complexity pays for itself in better results, lower costs, or new capabilities.

# Final Thoughts

AI orchestration represents a maturation of the field. We’re moving from “which model should I use?” to “how should I architect my AI system?” This mirrors every technology’s evolution, from monolithic to distributed, from choosing the best tool to composing the right tools.

The frameworks exist. The patterns are emerging. The question now is whether you’ll build AI applications the old way (hoping one model can do everything) or the new way: orchestrating specialized models and tools into systems that are greater than the sum of their parts.

The future of AI isn’t in finding the perfect model. It’s in learning to conduct the orchestra.

Vinod Chugani is an AI and data science educator who bridges the gap between emerging AI technologies and practical application for working professionals. His focus areas include agentic AI, machine learning applications, and automation workflows. Through his work as a technical mentor and instructor, Vinod has supported data professionals through skill development and career transitions. He brings analytical expertise from quantitative finance to his hands-on teaching approach. His content emphasizes actionable strategies and frameworks that professionals can apply immediately.