Image by Editor

# Introduction

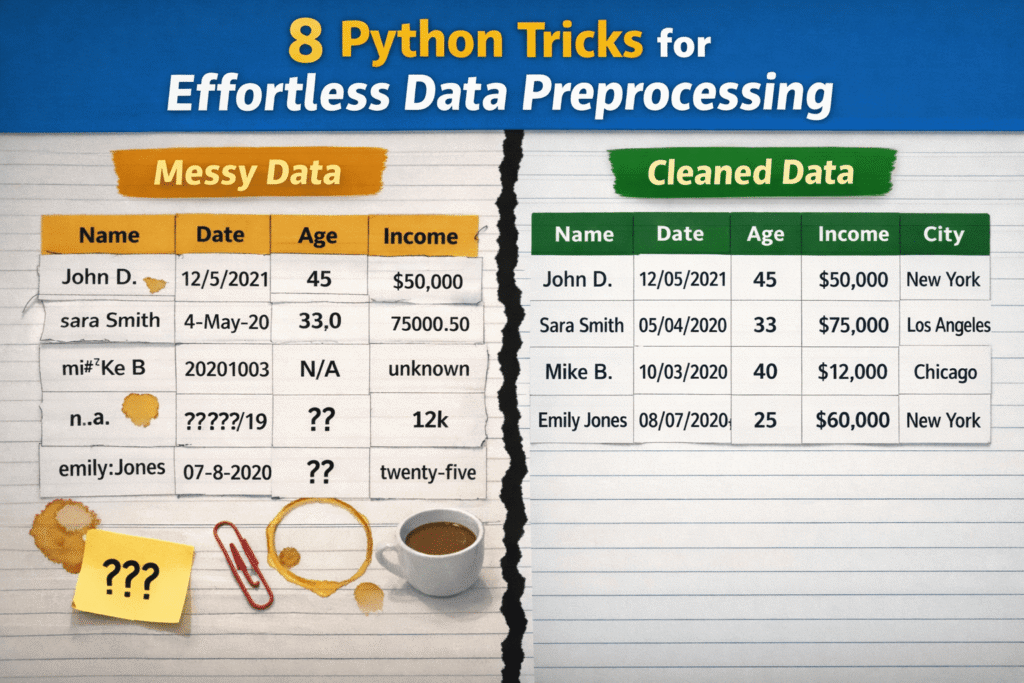

While data preprocessing holds substantial relevance in data science and machine learning workflows, these processes are often not conducted correctly, largely because they are perceived as overly complex, time-consuming, or requiring extensive custom code. As a result, practitioners may delay essential tasks like data cleaning, rely on brittle ad-hoc solutions that are unsustainable in the long run, or over-engineer solutions to problems that might be simple at their core.

This article presents 8 Python tricks to turn raw, messy data into clean, neatly preprocessed data with minimal effort.

Before looking at the specific tricks and accompanying code examples, the following preamble code sets up the necessary libraries and defines a toy dataset to illustrate each trick:

import pandas as pd

import numpy as np

# A tiny, intentionally messy dataset

df = pd.DataFrame({

” User Name “: [” Alice “, “bob”, “Bob”, “alice”, None],

“Age”: [“25”, “30”, “?”, “120”, “28”],

“Income$”: [“50000”, “60000”, None, “1000000”, “55000”],

“Join Date”: [“2023-01-01”, “01/15/2023”, “not a date”, None, “2023-02-01”],

“City”: [“New York”, “new york “, “NYC”, “New York”, “nyc”],

})

# 1. Normalizing Column Names Instantly

This is a very useful, one-liner style trick: in a single line of code, it normalizes the names of all columns in a dataset. The specifics depend on how exactly you want to normalize your attributes’ names, but the following example shows how to replace whitespaces with underscore symbols and lowercase everything, thereby ensuring a consistent, standardized naming convention. This is important to prevent annoying bugs in downstream tasks or to fix possible typos. No need to iterate column by column!

df.columns = df.columns.str.strip().str.lower().str.replace(” “, “_”)

# 2. Stripping Whitespaces from Strings at Scale

Sometimes you may only want to ensure that specific junk invisible to the human eye, like whitespaces at the beginning or end of string (categorical) values, is systematically removed across an entire dataset. This strategy neatly does so for all columns containing strings, leaving other columns, like numeric ones, unchanged.

df = df.apply(lambda s: s.str.strip() if s.dtype == “object” else s)

# 3. Converting Numeric Columns Safely

If we are not 100% sure that all values in a numeric column abide by an identical format, it is generally a good idea to explicitly convert these values to a numeric format, turning what could sometimes be messy strings looking like numbers into actual numbers. In a single line, we can do what otherwise would require try-except blocks and a more manual cleaning procedure.

df[“age”] = pd.to_numeric(df[“age”], errors=”coerce”)

df[“income$”] = pd.to_numeric(df[“income$”], errors=”coerce”)

Note here that other classical approaches like df[‘columna’].astype(float) could sometimes crash if invalid raw values that cannot be trivially converted into numeric were found.

# 4. Parsing Dates with errors=”coerce”

Similar validation-oriented procedure, distinct data type. This trick converts date-time values that are valid, nullifying those that are not. Using errors=”coerce” is key to tell Pandas that, if invalid, non-convertible values are found, they must be converted into NaT (Not a Time), instead of generating an error and crashing the program during execution.

df[“join_date”] = pd.to_datetime(df[“join_date”], errors=”coerce”)

# 5. Fixing Missing Values with Smart Defaults

For those unfamiliar with strategies to handle missing values other than dropping entire rows containing them, this strategy imputes those values — fills the gaps — using statistically-driven defaults like median or mode. An efficient, one-liner-based strategy that can be adjusted with different default aggregates. The [0] index accompanying the mode is used to obtain only one value in case of ties between two or several “most frequent values”.

df[“age”] = df[“age”].fillna(df[“age”].median())

df[“city”] = df[“city”].fillna(df[“city”].mode()[0])

# 6. Standardizing Categories with Map

In categorical columns with diverse values, such as cities, it is also necessary to standardize names and collapse possible inconsistencies for obtaining cleaner group names and making downstream group aggregations like groupby() reliable and effective. Aided by a dictionary, this example applies a one-to-one mapping on string values related to New York City, ensuring all of them are uniformly denoted by “NYC”.

city_map = {“new york”: “NYC”, “nyc”: “NYC”}

df[“city”] = df[“city”].str.lower().map(city_map).fillna(df[“city”])

# 7. Removing Duplicates Wisely and Flexibly

The key for this highly customizable duplicate removal strategy is the use of subset=[“user_name”]. In this example, it is used to tell Pandas to deem a row as duplicated only by looking at the “user_name” column, and verifying whether the value in the column is identical to the one in another row. A great way to ensure every unique user is represented only once in a dataset, preventing double counting and doing it all in a single instruction.

df = df.drop_duplicates(subset=[“user_name”])

# 8. Clipping Quantiles for Outlier Removal

The last trick consists of capping extreme values or outliers automatically, instead of entirely removing them. Specially useful when outliers are assumed to be due to manually introduced errors in the data, for instance. Clipping sets the extreme values falling below (and above) two percentiles (1 and 99 in the example), with such percentile values, keeping original values lying between the two specified percentiles unchanged. In simple terms, it is like keeping overly large or small values within the limits.

q_low, q_high = df[“income$”].quantile([0.01, 0.99])

df[“income$”] = df[“income$”].clip(q_low, q_high)

# Wrapping Up

This article illustrated eight useful tricks, tips, and strategies that will boost your data preprocessing pipelines in Python, making them more efficient, effective, and robust: all at the same time.

Iván Palomares Carrascosa is a leader, writer, speaker, and adviser in AI, machine learning, deep learning & LLMs. He trains and guides others in harnessing AI in the real world.